Think Your DeepSeek Is Safe? Three Ways You Can Lose It Today


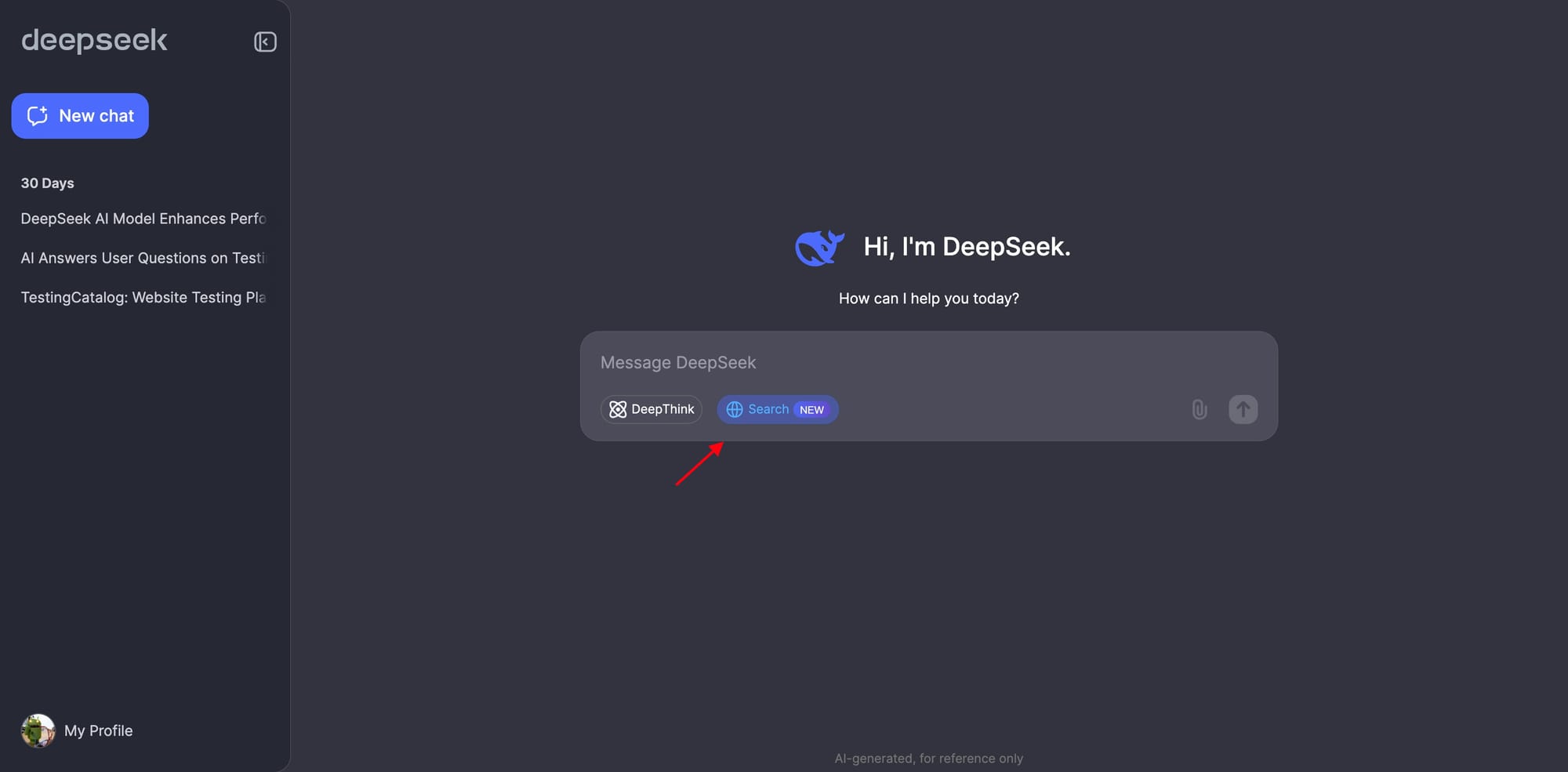

Why is DeepSeek suddenly such a big deal? 387) is a big deal because it shows how a disparate group of people and organizations located in different countries can pool their compute together to train a single model. 2024-04-15 Introduction: The aim of this post is to deep-dive into LLMs that are specialized in code generation tasks and see if we can use them to write code. For example, the synthetic nature of the API updates may not fully capture the complexities of real-world code library changes. You guys alluded to Anthropic seemingly not being able to capture the magic. "The DeepSeek model rollout is leading investors to question the lead that US companies have and how much is being spent and whether that spending will lead to profits (or overspending)," said Keith Lerner, analyst at Truist. Conversely, OpenAI CEO Sam Altman welcomed DeepSeek to the AI race, stating "r1 is an impressive model, particularly around what they’re able to deliver for the price," in a recent post on X. "We will obviously deliver much better models and also it’s legit invigorating to have a new competitor!"


Certainly, it’s very useful. Overall, the CodeUpdateArena benchmark represents an important contribution to the ongoing efforts to improve the code generation capabilities of large language models and make them more robust to the evolving nature of software development. Overall, the DeepSeek-Prover-V1.5 paper presents a promising approach to leveraging proof assistant feedback for improved theorem proving, and the results are impressive. The system is shown to outperform traditional theorem proving approaches, highlighting the potential of this combined reinforcement learning and Monte-Carlo Tree Search approach for advancing the field of automated theorem proving. Additionally, the paper does not address the potential generalization of the GRPO approach to other types of reasoning tasks beyond mathematics. This innovative approach has the potential to greatly accelerate progress in fields that rely on theorem proving, such as mathematics, computer science, and beyond. The key contributions of the paper include a novel approach to leveraging proof assistant feedback and advancements in reinforcement learning and search algorithms for theorem proving. Addressing these areas could further enhance the effectiveness and versatility of DeepSeek-Prover-V1.5, ultimately leading to even greater advancements in the field of automated theorem proving.


This is a Plain English Papers summary of a research paper called DeepSeek-Prover advances theorem proving through reinforcement learning and Monte-Carlo Tree Search with proof assistant feedback. This is a Plain English Papers summary of a research paper called DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models. The paper introduces DeepSeekMath 7B, a large language model that has been pre-trained on a large amount of math-related data from Common Crawl, totaling 120 billion tokens. First, they gathered a massive amount of math-related data from the web, including 120B math-related tokens from Common Crawl. First, the paper does not provide a detailed analysis of the types of mathematical problems or concepts that DeepSeekMath 7B excels at or struggles with. The researchers evaluate the performance of DeepSeekMath 7B on the competition-level MATH benchmark, and the model achieves an impressive score of 51.7% without relying on external toolkits or voting techniques. That result approaches the performance of state-of-the-art models like Gemini-Ultra and GPT-4.

The paper presents a new large language model called DeepSeekMath 7B that is specifically designed to excel at mathematical reasoning. Last updated 01 Dec, 2023. In a recent development, the DeepSeek LLM has emerged as a formidable force in the realm of language models, boasting an impressive 67 billion parameters. Where can we find large language models? In the context of theorem proving, the agent is the system that is searching for the solution, and the feedback comes from a proof assistant, a computer program that can verify the validity of a proof. The DeepSeek-Prover-V1.5 system represents a significant step forward in the field of automated theorem proving. DeepSeek-Prover-V1.5 is a system that combines reinforcement learning and Monte-Carlo Tree Search to harness the feedback from proof assistants for improved theorem proving. By combining reinforcement learning and Monte-Carlo Tree Search, the system is able to effectively harness the feedback from proof assistants to guide its search for solutions to complex mathematical problems. Proof Assistant Integration: The system seamlessly integrates with a proof assistant, which provides feedback on the validity of the agent's proposed logical steps. They proposed the shared experts to learn core capacities that are often used, and let the routed experts learn the peripheral capacities that are rarely used.
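The shared-versus-routed split in the last sentence can be illustrated with a toy sketch. Everything in it is an assumption made for illustration, not DeepSeek's actual implementation: the experts are hypothetical constant-scaling functions, the gate is a softmax over plain dot-product scores, and the names `moe_forward`, `make_scaling_expert`, and `gate_weights` are invented here. It only shows the idea that shared experts run on every input while a gate picks a few routed experts per input.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_scaling_expert(scale: float) -> Expert:
    """Hypothetical stand-in for an expert network: scales the input."""
    return lambda x: [scale * v for v in x]


def moe_forward(
    x: Vector,
    shared: List[Expert],
    routed: List[Expert],
    gate_weights: List[Vector],
    top_k: int,
) -> Tuple[Vector, List[int]]:
    """Sum the shared experts, then add the top_k gated routed experts."""
    out = [0.0] * len(x)

    # Shared experts run on every input, regardless of the gate.
    for expert in shared:
        out = [o + v for o, v in zip(out, expert(x))]

    # Assumed gate: one dot-product score per routed expert, then softmax.
    scores = [sum(w * v for w, v in zip(row, x)) for row in gate_weights]
    probs = softmax(scores)

    # Only the top_k highest-probability routed experts are evaluated.
    chosen = sorted(range(len(routed)), key=lambda i: probs[i], reverse=True)[:top_k]
    for i in chosen:
        out = [o + probs[i] * v for o, v in zip(out, routed[i](x))]

    return out, chosen


if __name__ == "__main__":
    x = [1.0, 0.0]
    shared = [make_scaling_expert(1.0)]
    routed = [make_scaling_expert(s) for s in (2.0, 3.0, 4.0)]
    gate_weights = [[0.0, 0.0], [5.0, 0.0], [1.0, 0.0]]
    output, chosen = moe_forward(x, shared, routed, gate_weights, top_k=1)
    print("routed experts used:", chosen)
    print("output:", output)
```

With `top_k=0` the output is just the sum of the shared experts, which makes the division of labor easy to see: the shared path carries what every input needs, and the routed path adds input-specific contributions.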
